N10-007 Compare and contrast common network vulnerabilities and threats

Attacks/threats

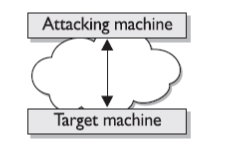

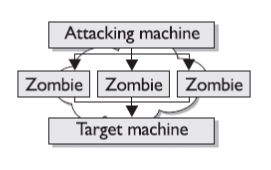

Denial of Service (DoS)

The purpose of a denial of service (DoS) attack is to disable or degrade network services. The attack either crashes the target system or slows it down so much that it can no longer work efficiently. DoS attacks are relatively easy to launch, and web servers are among their most common targets. The attacker denies legitimate users access to a device or network by continuously bombarding it with useless traffic. In the distributed form of the attack (DDoS), the attacker first plants zombie programs on many compromised computers, preferably ones with high-bandwidth connections. A controller, called the zombie master, sends instructions to these zombie computers. After receiving the instructions, the zombies start sending malicious traffic to the target.

Figure 62: DOS attack with a single attacker and a single target

A newer variant is the distributed reflection DoS (DRDoS) attack. Many machines send TCP SYN requests or ICMP ping messages to a large number of intermediate hosts, with the target's address spoofed as the source address. The intermediate hosts reply to that spoofed address, so the target receives a flood of packets it cannot handle.
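Reflection lets an attacker multiply traffic without revealing where it comes from. Putting numbers on it shows why this matters. The Python sketch below is a simple assumed model, not a measurement:

- Every spoofed request makes a reflector send `replies_per_request` reply packets of `reply_bytes` bytes to the target.
- A reflector that retransmits its SYN-ACK, for example, would count more than once.

The function name and all figures are hypothetical.

```python
def reflected_load_bps(attackers: int, requests_per_sec: int,
                       reply_bytes: int, replies_per_request: int = 1) -> int:
    """Bits per second arriving at the target in a simple reflection model.

    Each attacking machine sends `requests_per_sec` spoofed requests (source
    address = target). Every request makes some reflector send
    `replies_per_request` replies of `reply_bytes` bytes to the target.
    """
    replies_per_sec = attackers * requests_per_sec * replies_per_request
    return replies_per_sec * reply_bytes * 8  # bytes -> bits


# Hypothetical figures: 1,000 machines, 500 requests/s each, 60-byte replies.
print(reflected_load_bps(1000, 500, 60))  # 240000000, i.e. 240 Mbit/s
```

In this model, the target receives about 240 Mbit/s of replies it never asked for. The attacking machines never send a packet directly to the target.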

Figure 63: Zombie Attack

Other well-known DoS attacks and DDoS tools include:

TCP SYN flood, Ping of Death, Trinoo, Tribe Flood Network (TFN) and Tribe Flood Network 2000 (TFN2K), Stacheldraht and Trinity.

Viruses

A virus is computer code that attaches itself to other software on the computer. Each time the infected software runs, the virus reproduces itself and keeps spreading. A virus can crash the computer or slow it down so much that it cannot be used efficiently. Unlike a worm, a virus needs some human action to spread, such as running an infected program. Antivirus software is used to detect and remove viruses and keep the system virus-free.

Worm

A worm is a computer program that exploits vulnerabilities in computers or network systems to replicate itself. It spreads by creating copies of itself on the system or across the network. For example, a worm attached to an email can send copies of itself to the addresses in the email system's address book. Code Red and Nimda are examples of worms.

Attackers

Attackers are people, or devices, whose purpose is to harm the network or its systems. They attack the network using tools such as packet sniffers and port scanners. Their main motives are to obtain information about user data or the network, and to destroy files. Some attackers only want to slow down servers or the network, for example by exhausting the servers' memory. These kinds of attacks are called DoS attacks.

Man-in-the-Middle Attack

A man-in-the-middle attack can be thought of as a computer placed between you and the network to monitor the data you send and receive. In a man-in-the-middle attack, the attacker has access to all data sent and received by your side. The middleman can be inside the network or at the ISP. Man-in-the-middle attacks are usually carried out using network packet sniffers, routing protocols, or transport-layer protocols.

The middleman attacker’s goal is any or all of the following:

- Theft of information

- Hijacking of an ongoing session to gain access to your internal network resources

- Traffic analysis to derive information about your network and its users

- Denial of service

- Corruption of transmitted data

- Introduction of new information into network sessions

Smurf Attacks

A smurf attack sends a large number of ICMP (Internet Control Message Protocol) echo requests (pings) to an IP broadcast address. The requests carry the spoofed source address of a valid host. Because that host is real and traceable, it can be falsely framed as the attacker. The broadcast is delivered as a Layer 2 (Data Link layer) broadcast, so every host on the network receives the request. The hosts answer each ICMP echo request with an ICMP echo reply sent to the spoofed address. This consumes large amounts of bandwidth and results in a denial of service to valid users on the network.

A related attack, fraggle, uses UDP echo packets in the same way that smurf uses ICMP echo packets. Fraggle is a simple rewrite of smurf that uses a Layer 4 (Transport layer) broadcast. To prevent smurf attacks, networks should filter traffic at their edges: either where customers connect to the network, or where the network connects to its upstream routers. The main goal is to stop packets with spoofed source addresses from entering or leaving the network.
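The Python sketch below illustrates both halves of the smurf description, under stated assumptions.

- **Amplification.** An echo reply carries the same data as the request, so each responding host sends the victim roughly as many bytes as the attacker sent to the broadcast address.
- **Filtering.** The edge check forwards a customer's packet only if its source address falls inside a prefix assigned to that customer.

The function names are hypothetical, and the prefixes come from documentation address ranges. Real networks implement this filtering in router configuration, not in Python.

```python
import ipaddress


def smurf_reply_bytes(request_bytes: int, responding_hosts: int) -> int:
    """Bytes sent to the victim for ONE echo request sent to a broadcast address.

    Assumes every host on the subnet answers and that each echo reply is the
    same size as the request (an echo reply returns the request's data).
    """
    return request_bytes * responding_hosts


def permit_from_customer(src: str, customer_prefixes: list[str]) -> bool:
    """Edge filter: accept a packet from a customer only if its source address
    lies in one of the prefixes assigned to that customer (anti-spoofing)."""
    addr = ipaddress.ip_address(src)
    return any(addr in ipaddress.ip_network(p) for p in customer_prefixes)


customer = ["203.0.113.0/24"]  # documentation prefix, hypothetical customer
print(smurf_reply_bytes(64, 200))                      # 12800
print(permit_from_customer("203.0.113.7", customer))   # True
print(permit_from_customer("198.51.100.9", customer))  # False (spoofed source)
```

A single 64-byte ping to a subnet with 200 responsive hosts thus turns into 12,800 bytes aimed at the victim. Dropping packets whose source address does not belong to the sender's network stops the spoofed request before it leaves.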

Rogue Access Points

A rogue access point is an unauthorized wireless access point (WAP) installed on the network. Rogue access points pose a security threat to the network. For example, a user may set up their own wireless network without knowing the danger it poses to the network. A more knowledgeable user might install a wireless network and turn SSID broadcasting off, which makes it harder to notice. The solution to this problem is to run sniffers that detect wireless networks.
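One way to use the sniffer's output is to compare every access point it reports against an inventory of authorized access points. This minimal Python sketch makes the following assumptions:

- Scan results arrive as (SSID, BSSID) pairs, where the BSSID is the access point's MAC address.
- The data and function name are hypothetical.

Turning off SSID broadcasting does not hide the access point itself. Its beacons still carry a BSSID, typically with an empty SSID field.

```python
def find_rogue_aps(observed, authorized_bssids):
    """Return (ssid, bssid) pairs seen on the air that are not in the inventory.

    `observed` is an iterable of (ssid, bssid) tuples from a wireless scan.
    An empty SSID (broadcasting turned off) is reported as "<hidden>".
    """
    known = {bssid.lower() for bssid in authorized_bssids}
    rogues = []
    for ssid, bssid in observed:
        if bssid.lower() not in known:  # MAC comparison ignores letter case
            rogues.append((ssid or "<hidden>", bssid))
    return rogues


# Hypothetical scan results and inventory.
scan = [("CorpWiFi", "00:11:22:33:44:55"), ("", "66:77:88:99:AA:BB")]
print(find_rogue_aps(scan, ["00:11:22:33:44:55"]))
# [('<hidden>', '66:77:88:99:AA:BB')]
```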

Phishing

Phishing is a method in which the attacker poses as a trusted source or site and requests your personal information. For example, an attacker creates a copy of your bank's or credit card company's website and asks for your credit card details, which the attacker then uses for online purchases. The user believes they are giving the information to the real company but has been cheated.
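A fake site usually lives at an address that only resembles the real one. The Python sketch below accepts a link only if both of these hold:

- it uses HTTPS;
- its host name is exactly the trusted domain or a subdomain of it.

`bank.example` is a placeholder domain. The function is an illustration of the idea, not a complete phishing filter.

```python
from urllib.parse import urlsplit


def is_trusted_link(url: str, trusted_domain: str) -> bool:
    """True only for https URLs whose host is trusted_domain or one of its subdomains."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()  # hostname drops user info and port
    trusted = trusted_domain.lower()
    # Require a dot before the domain so "evilbank.example" does not match "bank.example".
    return parts.scheme == "https" and (host == trusted or host.endswith("." + trusted))


print(is_trusted_link("https://www.bank.example/login", "bank.example"))            # True
print(is_trusted_link("https://bank.example.attacker.test/login", "bank.example"))  # False
print(is_trusted_link("http://www.bank.example/login", "bank.example"))             # False
```

The look-alike in the second call starts with the real name, but its actual domain is `attacker.test`. This is why the check looks at the end of the host name, not the beginning.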

Spoofing

Spoofing is a technique in which the real source of a transmission, file, or email is concealed or replaced with a fake source. This technique enables an attacker, for example, to misrepresent the original source of a file available for download. Then he can trick users into accepting a file from an untrusted source, believing it is coming from a trusted source.

ARP Cache Poisoning

Every device that communicates over IP on a local network keeps an ARP table: a short-term memory of the IP addresses and MAC addresses it has already matched together. The ARP table ensures that the device doesn’t have to repeat ARP Requests for devices it has already communicated with.

Here’s an example of a normal ARP communication. Jessica, the receptionist, tells Word to print the latest company contact list. This is her first print job today. Her computer (IP address 192.168.0.16) wants to send the print job to the office’s HP LaserJet printer (IP address 192.168.0.45). So Jessica’s computer broadcasts an ARP Request to the entire local network asking, “Who has the IP address, 192.168.0.45?” as seen in Diagram 1.

All the devices on the network ignore this ARP Request, except for the HP LaserJet printer. The printer recognizes its own IP in the request and sends an ARP Reply: “Hey, my IP address is 192.168.0.45. Here is my MAC address: 00:90:7F:12:DE:7F,” as in Diagram 2.

Now Jessica’s computer knows the printer’s MAC address. It sends the print job to the correct device, and it also associates the printer’s MAC address of 00:90:7F:12:DE:7F with the printer’s IP address of 192.168.0.45 in its ARP table.
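The exchange with the printer can be modelled as a dictionary lookup. This toy Python sketch uses a hypothetical class and data:

- A cache miss triggers a broadcast that only the owner of the IP address answers.
- The answer is stored, so the next lookup needs no broadcast.

Real ARP caches also expire entries after a timeout, which this model ignores.

```python
class ArpTable:
    """Toy ARP cache mapping IP addresses to MAC addresses (no entry timeouts)."""

    def __init__(self):
        self.entries = {}

    def resolve(self, ip, lan):
        """Return (mac, broadcast_sent) for ip.

        `lan` is a dict standing in for the local network: IP -> MAC of the
        device that owns that IP and would answer an ARP Request.
        """
        if ip in self.entries:
            return self.entries[ip], False  # cache hit: no ARP Request needed
        mac = lan.get(ip)  # broadcast "Who has ip?"; only the owner replies
        if mac is not None:
            self.entries[ip] = mac  # remember the pairing for next time
        return mac, True


lan = {"192.168.0.45": "00:90:7F:12:DE:7F"}  # the HP LaserJet from the example
table = ArpTable()
print(table.resolve("192.168.0.45", lan))  # ('00:90:7F:12:DE:7F', True)
print(table.resolve("192.168.0.45", lan))  # ('00:90:7F:12:DE:7F', False)
```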

The founders of networking probably simplified the communication process for ARP so that it would function efficiently. Unfortunately, this simplicity also leads to major insecurity. Know why my short description of ARP doesn’t mention any sort of authentication method? Because in ARP, there is none.

ARP is very trusting, as in, gullible. When a networked device sends an ARP request, it simply trusts that when the ARP reply comes in, it really does come from the correct device. ARP provides no way to verify that the responding device is really who it says it is. In fact, many operating systems implement ARP so trustingly that devices that have not made an ARP request still accept ARP replies from other devices.

OK, so think like a malicious hacker. You just learned that the ARP protocol has no way of verifying ARP replies. You’ve learned many devices accept ARP replies before even requesting them. Hmmm. Well, why don’t I craft a perfectly valid, yet malicious, ARP reply containing any arbitrary IP and MAC address I choose? Since my victim’s computer will blindly accept the ARP entry into its ARP table, I can force my victim’s gullible computer into thinking any IP is related to any MAC address I want. Better yet, I can broadcast my faked ARP reply to my victim’s entire network and fool all his computers.
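A toy model shows why the missing authentication matters and how a defender might notice it. No packets are sent.

- `accept_reply` mimics a trusting ARP implementation that stores any reply, requested or not.
- `check_reply` adds the kind of monitoring performed by tools such as arpwatch: it raises an alert when an IP address suddenly maps to a different MAC address.

The function names and the attacker's MAC address are hypothetical.

```python
def accept_reply(table: dict, ip: str, mac: str) -> None:
    """Trusting ARP: store every reply, even unsolicited ones, overwriting old data."""
    table[ip] = mac


def check_reply(table: dict, ip: str, mac: str, alerts: list) -> None:
    """Monitoring: record the reply, but alert if it changes a known IP-to-MAC pairing."""
    old = table.get(ip)
    if old is not None and old.lower() != mac.lower():
        alerts.append(f"{ip} moved from {old} to {mac}")
    table[ip] = mac


printer_ip, printer_mac = "192.168.0.45", "00:90:7F:12:DE:7F"
fake_mac = "02:00:00:00:00:66"  # hypothetical attacker MAC

naive = {printer_ip: printer_mac}
accept_reply(naive, printer_ip, fake_mac)  # unsolicited, forged reply
print(naive[printer_ip])  # 02:00:00:00:00:66: print jobs now go to the attacker

watched, alerts = {printer_ip: printer_mac}, []
check_reply(watched, printer_ip, fake_mac, alerts)
print(alerts)  # ['192.168.0.45 moved from 00:90:7F:12:DE:7F to 02:00:00:00:00:66']
```

Detection does not stop the poisoning, since the entry is still overwritten. It does give an administrator a chance to notice that a device's hardware address changed without explanation.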

Social engineering

Social engineering is a common form of cracking. Both outsiders and people within an organization can use it. Social engineering is a hacker term for tricking people into revealing their password or some form of security information. It might include trying to get users to send passwords or other information over email, shoulder surfing, or any other method that tricks users into divulging information. Social engineering is an attack that attempts to take advantage of human behavior.

Vulnerabilities

Unused Services and Open Ports

A full installation of Red Hat Enterprise Linux contains 1000+ application and library packages. However, most server administrators do not opt to install every single package in the distribution, preferring instead to install a base installation of packages, including several server applications.

A common occurrence among system administrators is to install the operating system without paying attention to what programs are actually being installed. This can be problematic because unneeded services may be installed, configured with the default settings, and possibly turned on. This can cause unwanted services, such as Telnet, DHCP, or DNS, to run on a server or workstation without the administrator realizing it. These services in turn can cause unwanted traffic to the server, or even provide a potential pathway into the system for crackers.
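A quick way to see what a server actually exposes is to try connecting to the ports of services you did not mean to run. The Python sketch below is an audit helper for a host you administer. It reports which of the given TCP ports accept a connection.

- The default port list is illustrative: 21 for FTP, 23 for Telnet, 53 for DNS.
- DHCP runs over UDP, so a TCP check like this one will not find it.
- Tools such as `ss` or `netstat`, run on the server itself, give a fuller picture.

```python
import socket


def open_tcp_ports(host="127.0.0.1", ports=(21, 23, 53), timeout=0.5):
    """Return the ports in `ports` on which `host` accepts a TCP connection.

    Intended for auditing a machine you administer.
    """
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception
            if s.connect_ex((host, port)) == 0:
                found.append(port)
    return found


print(open_tcp_ports())  # whichever of 21, 23 and 53 are listening locally
```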

Unpatched Services

Most server applications that are included in a default installation are solid, thoroughly tested pieces of software. Having been in use in production environments for many years, their code has been thoroughly refined and many of the bugs have been found and fixed.

However, there is no such thing as perfect software and there is always room for further refinement. Moreover, newer software is often not as rigorously tested as one might expect, because of its recent arrival to production environments or because it may not be as popular as other server software.

Developers and system administrators often find exploitable bugs in server applications and publish the information on bug tracking and security-related websites such as the Bugtraq mailing list (http://www.securityfocus.com) or the Computer Emergency Response Team (CERT) website (http://www.cert.org). Although these mechanisms are an effective way of alerting the community to security vulnerabilities, it is up to system administrators to patch their systems promptly. This is particularly true because crackers have access to these same vulnerability tracking services and will use the information to crack unpatched systems whenever they can. Good system administration requires vigilance, constant bug tracking, and proper system maintenance to ensure a more secure computing environment.
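Acting on an advisory ultimately means comparing what is installed against the version in which each bug was fixed. The Python sketch below does that for simple dotted version numbers. The package names and versions are hypothetical. Real package managers, RPM included, use richer version rules (such as epochs and release numbers) that this sketch does not handle.

```python
def parse_version(version: str) -> tuple:
    """'2.4.10' -> (2, 4, 10), so versions compare number by number."""
    return tuple(int(part) for part in version.split("."))


def needs_patch(installed: dict, fixed_in: dict) -> list:
    """Packages whose installed version is older than the first fixed version."""
    return sorted(
        name
        for name, version in installed.items()
        if name in fixed_in and parse_version(version) < parse_version(fixed_in[name])
    )


# Hypothetical inventory and advisory data.
installed = {"httpd": "2.4.9", "openssh": "8.0"}
fixed_in = {"httpd": "2.4.10", "openssh": "7.9"}
print(needs_patch(installed, fixed_in))  # ['httpd']
```

Versions are compared as tuples of integers rather than as strings. Compared as strings, "2.4.9" would sort after "2.4.10", and the unpatched server would be missed.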

Inattentive Administration

Administrators who fail to patch their systems are one of the greatest threats to server security. The SANS (SysAdmin, Audit, Network, Security) Institute identifies the primary cause of computer security vulnerability as the practice to “assign untrained people to maintain security and provide neither the training nor the time to make it possible to do the job.”[14] This applies as much to inexperienced administrators as it does to overconfident or unmotivated administrators.

Some administrators fail to patch their servers and workstations, while others fail to watch log messages from the system kernel or network traffic. Another common error is leaving default passwords or keys for services unchanged. For example, some databases ship with default administration passwords because the database developers assume that the system administrator will change these passwords immediately after installation. If a database administrator fails to change this password, even an inexperienced cracker can use the widely known default password to gain administrative privileges to the database. These are only a few examples of how inattentive administration can lead to compromised servers.

Inherently Insecure Services

Even the most vigilant organization can fall victim to vulnerabilities if the network services they choose are inherently insecure. For instance, there are many services developed under the assumption that they are used over trusted networks; however, this assumption fails as soon as the service becomes available over the Internet — which is itself inherently untrusted.

One category of insecure network services consists of services that require unencrypted usernames and passwords for authentication. Telnet and FTP are two such services. If packet sniffing software is monitoring traffic between the remote user and such a service, usernames and passwords can easily be intercepted.
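When an FTP client logs in, it sends the RFC 959 `USER` and `PASS` commands over the control connection as plain ASCII text. The Python sketch below only builds those bytes, with hypothetical credentials. Anyone capturing the traffic sees exactly the same bytes, password included. Telnet is similar: every keystroke, including a password typed at the login prompt, crosses the network unencrypted.

```python
def ftp_login_bytes(user: str, password: str) -> bytes:
    """The control-connection commands an FTP client sends to log in (RFC 959).

    Each command is plain ASCII ending in CRLF; nothing is encrypted.
    """
    return f"USER {user}\r\nPASS {password}\r\n".encode("ascii")


payload = ftp_login_bytes("alice", "s3cret")  # hypothetical credentials
print(payload)               # b'USER alice\r\nPASS s3cret\r\n'
print(b"s3cret" in payload)  # True: a sniffer sees the password as-is
```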

Such services are also more vulnerable to what the security industry calls the man-in-the-middle attack. In one form of this attack, a cracker redirects network traffic by tricking a compromised name server on the network into pointing to his machine instead of the intended server. When someone opens a remote session to the server, the attacker's machine acts as an invisible conduit. It sits quietly between the remote service and the unsuspecting user, capturing information. In this way, a cracker can gather administrative passwords and raw data without the server or the user realizing it.

Another category of insecure services includes network file systems and information services such as NFS and NIS. These were developed explicitly for LAN use but are, unfortunately, extended to WANs for remote users. By default, NFS has no authentication or security mechanisms configured to prevent a cracker from mounting an NFS share and accessing everything in it. NIS, likewise, keeps vital information that every computer on the network must know, including passwords and file permissions, in a plain text ASCII or DBM (ASCII-derived) database. A cracker who gains access to this database can then access every user account on the network, including the administrator's account.

By default, Red Hat Enterprise Linux is released with all such services turned off. However, since administrators often find themselves forced to use these services, careful configuration is critical.

TELNET

Telnet is defined in RFC 854 and works as a virtual terminal protocol. It lets a user open a session on a remote host and execute commands on that host. It was widely used in multiuser environments and was the preferred method for UNIX systems. Today its use is largely limited to accessing routers and other managed network devices. Telnet sends everything, including passwords, in clear text. This weak security is the primary reason it has been replaced by SSH.

HTTP

In HTTP, requests are sent in clear text. This is not secure enough for applications such as e-commerce. The solution is the HTTPS protocol, which uses SSL (Secure Sockets Layer), or its successor TLS (Transport Layer Security), to encrypt the information exchanged between the client and the server.

For HTTPS to be used, both the client and server must support it. All popular browsers now support HTTPS, as do web server products, such as Microsoft Internet Information Server (IIS), Apache, and almost all other web server applications that provide sensitive applications. When you are accessing an application that uses HTTPS, the URL starts with https: rather than http:
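On the client side, using HTTPS properly means more than changing the URL scheme: the client should also verify the server's certificate. The Python sketch below uses the standard `ssl` and `urllib.request` modules.

- `ssl.create_default_context()` turns on certificate and host name verification.
- The sketch also refuses TLS versions older than 1.2. This is an assumed policy, not a requirement from the text.
- The `fetch` helper is hypothetical and simply rejects plain `http://` URLs.

```python
import ssl
import urllib.request


def make_https_context() -> ssl.SSLContext:
    """Client-side TLS settings: verify the server certificate and host name."""
    ctx = ssl.create_default_context()            # CERT_REQUIRED + check_hostname
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # assumed policy: no older TLS/SSL
    return ctx


def fetch(url: str) -> bytes:
    """Download url over HTTPS only; plain http:// is refused."""
    if not url.lower().startswith("https://"):
        raise ValueError("refusing to send a request over plain HTTP")
    with urllib.request.urlopen(url, context=make_https_context(), timeout=10) as resp:
        return resp.read()
```

If the server's certificate does not match its host name or is not trusted, `urlopen` raises an error instead of silently sending data to a possible man in the middle.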

SIP

Voice over IP (VoIP) is a convenient and cheaper alternative to regular telephones. Voice conversations are carried in IP packets over the Internet. VoIP needs signaling protocols to work effectively, and a key one is the Session Initiation Protocol (SIP). SIP works as an application-layer protocol and establishes multimedia sessions. It can create communication sessions for services such as audio and video conferencing, online gaming, and one-to-one conversations. SIP uses TCP or UDP to transport data: TCP guarantees delivery of packets, whereas UDP does not.
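SIP is a text-based protocol, so its messages are easy to read. The Python sketch below assembles a minimal INVITE, the request that starts a session, with the header fields RFC 3261 requires.

- The addresses are hypothetical.
- Port 5060 is SIP's default port.
- A real INVITE would normally also carry an SDP body describing the media; it is left out here.
- The `transport` parameter shows where the choice between UDP and TCP appears in the message.

```python
import uuid


def build_invite(caller: str, callee: str, local_host: str, transport: str = "UDP") -> str:
    """Minimal SIP INVITE (RFC 3261 required headers, no SDP body).

    caller/callee are "user@domain" strings; transport is "UDP" or "TCP".
    """
    branch = "z9hG4bK" + uuid.uuid4().hex[:12]  # Via branch must start with this magic cookie
    from_tag = uuid.uuid4().hex[:8]
    lines = [
        f"INVITE sip:{callee} SIP/2.0",
        f"Via: SIP/2.0/{transport} {local_host}:5060;branch={branch}",
        "Max-Forwards: 70",
        f"To: <sip:{callee}>",
        f"From: <sip:{caller}>;tag={from_tag}",
        f"Call-ID: {uuid.uuid4().hex}@{local_host}",
        "CSeq: 1 INVITE",
        f"Contact: <sip:{caller.split('@')[0]}@{local_host}:5060>",
        "Content-Length: 0",
    ]
    # Header lines end in CRLF; an empty line separates headers from the body.
    return "\r\n".join(lines) + "\r\n\r\n"


print(build_invite("alice@example.com", "bob@example.com", "192.0.2.10"))
```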

FTP

FTP (File Transfer Protocol) is defined in RFC 959. It is used to upload files to, and download files from, a remote host, which must be running FTP server software. If the user has the necessary permissions, FTP also allows viewing folder contents, renaming files, and deleting files. FTP uses TCP to transport packets, so delivery is guaranteed. It authenticates users with a username and password. FTP server software can also be configured to allow anonymous logons, and FTP servers that offer files to the general public work this way. This makes FTP a popular choice for distributing files over the Internet and for exchanging large files across a LAN. FTP server capabilities are included in common network operating systems, and whether to use them is a matter of choice. Third-party clients such as CuteFTP and SmartFTP are also commonly used.

By default, FTP assumes that files being uploaded and downloaded are text (ASCII) files. If they are not, the transfer mode must be changed to binary. More sophisticated clients such as CuteFTP switch between transfer modes automatically, while more basic clients require the user to switch manually. FTP is a frequently used application-layer service. Some of the commands used by a command-line FTP client are:

| Command | Purpose of the Command |
| --- | --- |
| ls | Lists the files in the current directory on the remote system. |
| cd | Changes the working directory on the remote system. |
| lcd | Changes the working directory on the local host. |
| put | Uploads a single file to the remote host. |
| get | Downloads a single file from the remote host. |
| mput | Stands for "multiple put"; uploads multiple files to the remote host. |
| mget | Stands for "multiple get"; downloads multiple files from the remote host. |
| binary | Switches transfers to binary mode. |
| ascii | Switches transfers to the default mode, that is, ASCII mode. |

Table 2: FTP Commands and their Purposes
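Behind each client command are one or more RFC 959 protocol commands sent over the control connection. The Python sketch below maps the commands in Table 2 to those protocol commands. It is illustrative only:

- It omits the data-connection setup (`PORT` or `PASV`) that listings and file transfers also need.
- The mapping for `ls` is an assumption, because clients differ: some send `NLST` for a names-only listing, others send `LIST`.

```python
# Illustrative mapping from Table 2's client commands to RFC 959 commands.
# Data-connection setup (PORT or PASV) is omitted.
CLIENT_TO_PROTOCOL = {
    "ls": ["LIST"],        # assumption: some clients send NLST (names only)
    "cd": ["CWD {0}"],
    "lcd": [],             # local only: nothing is sent to the server
    "put": ["STOR {0}"],
    "get": ["RETR {0}"],
    "binary": ["TYPE I"],  # "image" (binary) type
    "ascii": ["TYPE A"],   # ASCII type, the FTP default
}


def translate(command: str, *args: str) -> list:
    """Protocol commands the client would send for one client command."""
    if command == "mput":
        return [f"STOR {name}" for name in args]  # one STOR per file
    if command == "mget":
        return [f"RETR {name}" for name in args]  # one RETR per file
    return [template.format(*args) for template in CLIENT_TO_PROTOCOL[command]]


print(translate("cd", "pub"))               # ['CWD pub']
print(translate("mget", "a.txt", "b.txt"))  # ['RETR a.txt', 'RETR b.txt']
print(translate("binary"))                  # ['TYPE I']
```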

TFTP

TFTP (Trivial File Transfer Protocol) is defined in RFC 1350. It is a simplified variation of FTP, used for simple downloads. It is not as secure as FTP, since it has no user authentication, and it offers far fewer functions. Unlike FTP, which allows moving across directories, TFTP does not. To use TFTP, you must specify the exact location and name of the file you request. TFTP uses UDP to transport data.
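TFTP's simplicity shows in its packet format. Per RFC 1350, a read request contains only these fields, in order:

1. a 2-byte opcode;
2. the file name, followed by a zero byte;
3. the transfer mode, such as `netascii` or `octet`, followed by another zero byte.

The request is sent over UDP to port 69. It has no field for a user name or password. The Python sketch below builds a read request and an acknowledgement. The file name is hypothetical.

```python
import struct

TFTP_PORT = 69  # well-known UDP port for TFTP requests
RRQ, WRQ, DATA, ACK, ERROR = 1, 2, 3, 4, 5  # opcodes from RFC 1350


def build_rrq(filename: str, mode: str = "octet") -> bytes:
    """Read request: opcode, filename, 0 byte, mode, 0 byte.

    There is no user name or password field: TFTP has no authentication.
    """
    return (struct.pack("!H", RRQ)
            + filename.encode("ascii") + b"\x00"
            + mode.encode("ascii") + b"\x00")


def build_ack(block: int) -> bytes:
    """Acknowledge DATA block `block`: opcode 4 followed by the 2-byte block number."""
    return struct.pack("!HH", ACK, block)


print(build_rrq("boot.cfg"))  # b'\x00\x01boot.cfg\x00octet\x00'
print(build_ack(1))           # b'\x00\x04\x00\x01'
```

The request names the file directly. There is no way to list or browse directories, which is why the client must already know the exact file name.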

SNMPv1 and SNMPv2

When you look at product summaries and specifications for a device, do you consider all versions of SNMP to be the same? Although they might seem quite similar, there are actually some big differences that can get you into trouble if you’re not careful. Imagine how much safer it would be to know the fundamental differences between SNMPv1, SNMPv2c, and SNMPv3.

SNMPv1

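SNMPv1 was the first version of SNMP. Although it accomplished its goal of being an open, standard protocol, it was found to be lacking in key areas for certain applications. One of these areas is security: SNMPv1 authenticates requests only with a community string, which is sent in clear text.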

SNMPv2c

SNMPv2c is a sub-version of SNMPv2. Its key advantage over previous versions is the Inform command. Unlike Traps, which are simply received by a manager, Informs are positively acknowledged with a response message. If a manager does not reply to an Inform, the SNMP agent will resend the Inform.

Other advantages include:

- improved error handling

- improved SET commands
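The difference between a Trap and an Inform comes down to what the agent does after sending it. A Trap is sent once and forgotten. An Inform is resent until it is acknowledged or the retry limit runs out.

In the Python sketch below, `send` is a hypothetical callable standing in for the network. It transmits the notification and returns True if the manager's response arrives before a timeout. The retry count of 3 is an assumption; real agents typically make it configurable.

```python
def send_trap(send) -> None:
    """Trap: transmit once; the manager does not acknowledge it."""
    send()


def send_inform(send, retries: int = 3):
    """Inform: retransmit until the manager responds or retries run out.

    Returns the number of transmissions that were needed, or None if the
    Inform was never acknowledged.
    """
    for attempt in range(1, retries + 2):  # first transmission + `retries` resends
        if send():
            return attempt
    return None


# Hypothetical network: the first two Informs are lost, the third is acknowledged.
responses = iter([False, False, True])
print(send_inform(lambda: next(responses)))  # 3
```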

TEMPEST/RF emanation

TEMPEST is a U.S. government code word that identifies a classified set of standards for limiting electric or electromagnetic radiation emanations from electronic equipment. Microchips, monitors, printers, and all other electronic devices emit radiation through the air or through conductors (such as wiring or water pipes). An example is using a kitchen appliance while watching television: the static on your TV screen is interference caused by emanations.

During the 1950s, the government became concerned that emanations could be captured and then reconstructed. Obviously, the emanations from a blender aren’t important, but emanations from an electronic encryption device would be. If the emanations were recorded, interpreted, and then played back on a similar device, it would be extremely easy to reveal the content of an encrypted message. Research showed it was possible to capture emanations from a distance, and as a response, the TEMPEST program was started.

The purpose of the program was to introduce standards that would reduce the chances of “leakage” from devices used to process, transmit, or store sensitive information. TEMPEST computers and peripherals (printers, scanners, tape drives, mice, etc.) are used by government agencies and contractors to protect data from emanations monitoring. This is typically done by shielding the device (or sometimes a room or entire building) with copper or other conductive materials. (There are also active measures for “jamming” electromagnetic signals.)
